SHREE LEARNING ACADEMY

Operating System | Computer Hardware - Processors

We already know that the operating system bridges the gap between the hardware and the user. To do this job, the operating system needs to know about the hardware installed in the system. So let us quickly go over some basics of computer hardware, so that in later chapters we can learn how the operating system manages it.

Introduction

The CPU is often called the brain of the computer. It fetches instructions from memory and executes them. The fundamental cycle of a CPU is to fetch an instruction from memory, decode it to determine its type and operands, and finally execute it. This cycle of fetching, decoding and executing is repeated until the program is finished.
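The cycle described above can be sketched with a toy interpreter. This is only an illustration: the three opcodes (`LOAD`, `ADD`, `HALT`), the single accumulator register and the `run` function are hypothetical and do not correspond to any real instruction set.

```python
# Toy CPU sketch. The opcodes and the run() function are made up for illustration.
def run(program):
    """Run a list of (opcode, operand) pairs and return the accumulator."""
    acc = 0   # a single general register
    pc = 0    # program counter: index of the next instruction to fetch
    while True:
        opcode, operand = program[pc]   # fetch the instruction at pc
        pc += 1                         # pc now points at the following instruction
        if opcode == "LOAD":            # decode the type, then execute
            acc = operand
        elif opcode == "ADD":
            acc += operand
        elif opcode == "HALT":
            return acc
        else:
            # An instruction outside this CPU's instruction set cannot be executed.
            raise ValueError(f"unknown opcode: {opcode}")


program = [("LOAD", 2), ("ADD", 3), ("HALT", 0)]
print(run(program))  # prints 5
```

The loop keeps fetching, decoding and executing until it meets `HALT`, which plays the role of the program finishing.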

Every CPU has a specific set of instructions that it is able to execute. Therefore a program built for one processor family will not necessarily run on another. For example, an x86 processor cannot execute ARM programs, and an ARM processor cannot execute x86 programs.

Registers

All CPUs use registers to hold temporary data, because reading from and writing to main memory takes much longer than updating a register. The general registers hold temporary results, while some special registers hold specific kinds of information. One example of a special register is the program counter.

Program Counter

The program counter stores the address of the next instruction to be fetched from memory. After an instruction is fetched, the program counter is updated so that it holds the address of the instruction that follows.

Stack Pointer

Another special register is the stack pointer. It points to the top of the stack. A stack is a data structure that stores data in a Last In First Out manner. For example, if we store three variables in the stack, first A, then B and finally C, then on removal C comes out first, followed by B and finally A. In other words, variables stored in the order ABC are removed in the order CBA, i.e. Last In First Out.
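The ABC example above can be tried directly. The sketch below uses a Python list as the stack, which is a convenience for illustration and not how a hardware stack is laid out in memory.

```python
stack = []                      # a Python list used as a stack
for name in ["A", "B", "C"]:
    stack.append(name)          # push: the new item becomes the top of the stack

removed = []
while stack:
    removed.append(stack.pop())  # pop: always takes the item on top

print(removed)  # prints ['C', 'B', 'A']
```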

Program Status Word

Another register is the PSW, short for Program Status Word. It contains the condition code bits, which are set by comparison instructions, along with the CPU priority, the mode in which the CPU is running (for example, user mode or kernel mode) and some other control bits. User programs can usually read the whole PSW, but they can write only some of its fields. The PSW plays an important role in system calls and input/output operations.

The operating system must know about all of these registers. When it time-multiplexes the CPU, it first has to save all the registers of the currently running program, so that when this program runs again in the future it does not have to start from scratch and can continue from where it left off. Once the operating system has saved the registers, the second program can start.
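A minimal sketch of this save-and-restore step is shown below. The `Registers` class, the `context_switch` function and the register values are all hypothetical; a real operating system does this in low-level code and saves many more registers.

```python
from dataclasses import dataclass, field


@dataclass
class Registers:
    """Hypothetical register set: program counter, stack pointer, PSW, general registers."""
    pc: int = 0
    sp: int = 0
    psw: int = 0
    general: list = field(default_factory=lambda: [0] * 4)


def context_switch(cpu, saved, current, nxt):
    """Save the CPU registers for `current`, then load the ones saved for `nxt`."""
    saved[current] = Registers(cpu.pc, cpu.sp, cpu.psw, list(cpu.general))
    restored = saved[nxt]
    cpu.pc, cpu.sp, cpu.psw = restored.pc, restored.sp, restored.psw
    cpu.general = list(restored.general)


cpu = Registers(pc=100, sp=900, general=[1, 2, 3, 4])   # program "A" is running
saved = {"B": Registers(pc=500, sp=700)}                 # program "B" was paused earlier

context_switch(cpu, saved, "A", "B")
print(cpu.pc)               # prints 500: B continues where it left off
context_switch(cpu, saved, "B", "A")
print(cpu.pc, cpu.general)  # prints 100 [1, 2, 3, 4]: A's state is back
```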

The fetch decode execute cycle

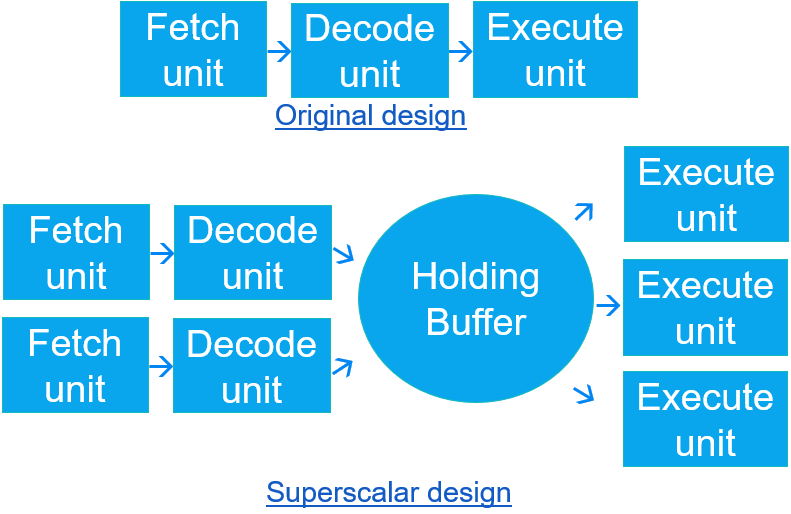

Modern CPUs can work on more than one instruction at a time, which improves on the simple fetch, decode and execute cycle that we just saw. The idea is that while the CPU is executing instruction A, another part of the CPU is decoding instruction B, and yet another part is fetching instruction C. This arrangement is called a pipeline: the next instruction starts before the previous one has finished, which greatly reduces the time that parts of the CPU sit idle.
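The overlap can be made concrete with a small sketch of a three-stage pipeline. It assumes every stage takes exactly one clock cycle and nothing ever stalls, which real pipelines do not guarantee; the function name `pipeline_schedule` is made up for this example.

```python
STAGES = ["fetch", "decode", "execute"]


def pipeline_schedule(instructions):
    """Return, for each clock cycle, which instruction occupies each stage."""
    schedule = []
    total_cycles = len(instructions) + len(STAGES) - 1
    for cycle in range(total_cycles):
        row = {}
        for depth, stage in enumerate(STAGES):
            index = cycle - depth   # the instruction that entered the pipeline `depth` cycles ago
            if 0 <= index < len(instructions):
                row[stage] = instructions[index]
        schedule.append(row)
    return schedule


for cycle, row in enumerate(pipeline_schedule(["A", "B", "C"]), start=1):
    print(cycle, row)
```

In the third cycle the sketch shows C being fetched, B being decoded and A being executed, all at once. Under these assumptions three instructions finish in 5 cycles, instead of the 9 cycles needed if each instruction had to complete all three stages before the next one started.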

Superscalar

A superscalar CPU is a more advanced design than the simple pipeline. In this design, multiple instructions are fetched and decoded together and then placed in a holding buffer, where they wait until an execution unit is free. As soon as an execution unit becomes free, it takes an instruction it can handle from the holding buffer and executes it. One consequence of this design is that instructions are often executed out of order, that is, not in the sequence in which they appear in the program. It is therefore up to the hardware to make sure that the result is the same as if the instructions had been executed in order. Some of this complexity also falls on the operating system, which we will talk about in the coming chapters.

Kernel mode and User mode

In the previous chapters, we talked about kernel mode and user mode. We saw that in kernel mode the CPU has full access to the hardware and can execute every instruction, whereas in user mode only a subset of the instructions is available and a program must ask the operating system to act on its behalf for anything else.

Normally, instructions involving input/output and memory protection are not allowed in user mode. To have such work done, the user program makes a system call, which traps into the kernel and invokes the operating system. The TRAP instruction switches the CPU from user mode to kernel mode and starts the operating system, which carries out the requested work. When it is done, control returns to the user program at the instruction following the system call. We will discuss system calls in detail later in the chapter. For the time being, just think of the TRAP instruction as a mechanism for switching from user mode to kernel mode without jeopardizing security.
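The switch described above can be modelled with a toy class. Everything here (`ToyMachine`, `privileged_write`, `system_call`, the `disk` list) is hypothetical and only mimics the idea; on real hardware the mode bit lives in the CPU and the trap is a machine instruction.

```python
class ToyMachine:
    """Toy model of user mode and kernel mode. Not a real API."""

    def __init__(self):
        self.mode = "user"
        self.disk = []

    def privileged_write(self, data):
        # Stands in for an I/O instruction that is only legal in kernel mode.
        if self.mode != "kernel":
            raise PermissionError("privileged instruction in user mode")
        self.disk.append(data)

    def system_call(self, data):
        # Stands in for the trap: enter kernel mode, do the work, return to user mode.
        self.mode = "kernel"
        try:
            self.privileged_write(data)
        finally:
            self.mode = "user"


machine = ToyMachine()
try:
    machine.privileged_write("direct")   # the user program tries the instruction itself
except PermissionError as error:
    print("blocked:", error)

machine.system_call("via trap")          # the same work, requested through a system call
print(machine.disk, machine.mode)        # prints ['via trap'] user
```

The user program never gets to run in kernel mode itself. It can only ask for the work to be done and gets control back in user mode.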

Multithreaded and Multicore chips

Moore's Law

Moore's law is commonly quoted as saying that the number of transistors on a chip doubles every 18 months (Moore's own 1975 revision put the period at roughly two years). It is named after Gordon Moore, a co-founder of Intel, and it is an observation about how fast engineers are able to shrink transistors. Moore's law has held for more than three decades, and it has been expected to hold for at least one more. After that, the number of atoms per transistor may become too small and quantum mechanics will start to play a large role.
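The doubling rule is easy to turn into arithmetic. The sketch below assumes an idealized, perfectly regular doubling; the function name `transistors_after` and the starting count of one million are made up for illustration, and the doubling period is a parameter so that other figures can be tried.

```python
def transistors_after(initial, months, doubling_period_months=18):
    """Projected transistor count after `months`, doubling once per period."""
    return initial * 2 ** (months / doubling_period_months)


# Two doubling periods (36 months) give a factor of four.
print(transistors_after(1_000_000, 36))  # prints 4000000.0
```

With an 18-month period, ten years corresponds to a growth factor of roughly 100, which shows how quickly the numbers get large.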

The growing number of transistors raises a question: what should we do with all of them? One option is the superscalar architecture that we have just seen, with its multiple functional units. Another is to increase the size of the CPU's caches, and this is indeed happening. But if transistor counts keep growing this fast, even larger caches will eventually stop helping.

The next step is to replicate not only the functional units but also part of the control logic. Intel brought this to the x86 line with the Pentium 4 under the name hyperthreading; the general technique is called multithreading. The CPU holds the state of two or more different threads and switches back and forth between them on a nanosecond time scale. You may be wondering what a thread is. We will cover it in the chapters ahead, but for now you may think of it as a lightweight process.

Multithreading does not give true parallelism. Only one thread runs at any instant; what has been reduced is the time needed to switch between threads, which is now on the order of a nanosecond.

Operating System and Multithreading

Let us discuss the connection between multithreading and the operating system. A processor that can hold two threads looks like two CPUs to the operating system, because each hardware thread appears as a separate CPU. A system with two physical CPUs, each holding two threads, therefore looks like four CPUs. The problem is that if a job has two threads to run, the operating system may schedule both of them on CPU 1, because from its point of view the four logical CPUs are all independent, although in reality they are not. This is inefficient: CPU 1 does all the work while CPU 2 sits completely idle, and execution is slower. It is better for the operating system to place one thread on each physical CPU.
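The difference between the two placements can be sketched as follows. The layout (two physical CPUs with two hardware threads each) follows the example above, but the function names and the placement policies are hypothetical simplifications, not how any particular scheduler is implemented.

```python
# Each logical CPU is written as (physical_cpu, hardware_thread): 2 CPUs x 2 threads.
LOGICAL_CPUS = [(0, 0), (0, 1), (1, 0), (1, 1)]


def naive_assign(n_threads):
    """Treat all logical CPUs as equal and fill them in listed order."""
    return LOGICAL_CPUS[:n_threads]


def topology_aware_assign(n_threads):
    """Use one hardware thread on every physical CPU before doubling up."""
    ordered = sorted(LOGICAL_CPUS, key=lambda cpu: (cpu[1], cpu[0]))
    return ordered[:n_threads]


print(naive_assign(2))           # prints [(0, 0), (0, 1)]: both on physical CPU 0
print(topology_aware_assign(2))  # prints [(0, 0), (1, 0)]: one on each physical CPU
```

The naive version leaves the second physical CPU idle, which is the inefficiency described above. The second version only works because it knows which logical CPUs share a physical one.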

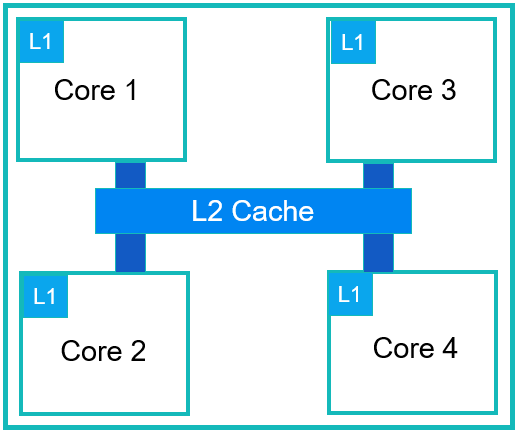

Apart from multithreading, most CPU chips these days come with 2, 4, 8 or more cores. We call them multicore chips. Each core works like an individual mini CPU. As you can see in the figure, this processor has four cores, each with its own L1 cache, and a shared L2 cache. We will talk about caches in the memory section.
